In machine learning, the main goal is to create models that work well on the data they have been trained and on data they have never seen before. Management Compromise of distortion It becomes important because it is a key element that explains that models may not work well on new data.

Improving the performance of distortion of the model understanding in relation to machine learning, deviation of the part plays in predictions and how these two elements interact. Knowledge of this concept explains why models may seem simple, too complicated or almost right.

The guide brings a comprehensive theme of compromise distortion to a level that is understandable and accessible. Whether you are a beginner in the field or want to move your most advanced models to the next level, you will receive a practical advice that narrows the gap between theory and results.

Introduction: The nature of predictive mistakes

It is important to understand before immersion in specifics Two main contributors to a prediction error In the supervised teaching tasks:

- Bias: Error due to erroneous or too simplified assumption in learning algorithm.

- Dispersion: Error due to sensitivity to small fluctuations in the training set.

We will also be satisfied with them Faultwhich is noise to own data and cannot be alleviated by any model.

The expected total model error on invisible data can be mathematically distributed as:

Expected error = distortion^2 + dispersion + non -removable error

This decomposition supports the framework of bias and deviations and serves as a compass to select and optimize the model.

Do you want to take your skills further? Join Science about data and machine learning with Python Rasu and get practices with advanced techniques, projects and mentoring.

What is the bias in machine learning?

The bias represents a peace into which the model systematically deviates from the actual function it closer. It comes from restrictive claims stored by an algorithm that can overflow the basic data structure.

Technical definition:

In the statistical context, bias There is a difference between experimental (or average) model predictance and real target variable value.

Common causes of high distortion:

- Overs Implified Models (eg linear regression for non -linear data)

- Insufficient duration of training

- Limited function or irrelevant representation of gold functions

- Insufficient parameterization

Consequences:

- High training and test errors

- The inability to capture meaningful formulas

- Underfitting

Example:

Imagine using a simple linear model to predict real estate prices based only on square shots. If the actual prices also depend on the location, age of the house and the number of rooms, the model regulations are too narrow, which results in High distortion.

What is the scattering in machine learning?

The scattering reflects the model’s sensitivity to specific examples used in training. High dispersion model learns noise and details in training data for such assets that it is poorly implemented on new, invisible data.

Technical definition:

Dispersion It is the variability of model predictions for a given data point when different data sets are used.

Normal causes of high scattering:

- Highly flexible models (eg deep neural network without regularization)

- Crowded due to limited training data

- Excessive complexity of elements

- Insufficient generalization control

Consequences:

- Very low training error

- A high test error

- Rewrite

Example:

Training data can be remembered by the decision -making tree without a depth limit. When evaluating the test kit, its performance plums due to learned noise classic Scatter Behavior.

Party vs. Dispersion: Comparative analysis

Understanding the contrast between distortion and scattering helps the diagnostic model of behavior and leads improvement strategy.

| Criteria | Bias | Dispersion |

| Definition | Error due to incorrect assumptions | Error due to sensitivity to data changes |

| Model behavior | Underfitting | Rewrite |

| Error training | High | Low |

| Error | High | High |

| Type model | Simple (eg linear models) | Complex (eg deep nets, full trees) |

| Strategy correction | Increase the complexity of the model | Use regularization, reduce the complexity |

Explore the difference between the two in this manual Turbocharging and insufficiently dissecting in machine learning And how they affect the performance of the model.

Compromise distortion in machine learning

Tea Compromise of distortion It encapsulates inherent voltage between insufficient and overcrowding. Improvement of one often worsens the other. The goal is not to eliminate both, but to Find a sweet place Where the model reaches a minimum generalization error.

Key insight:

- Reducing distortion usually involves increasing the complexity of the model.

- Reduction of scattering often requires simplification of the model or storage of restrictions.

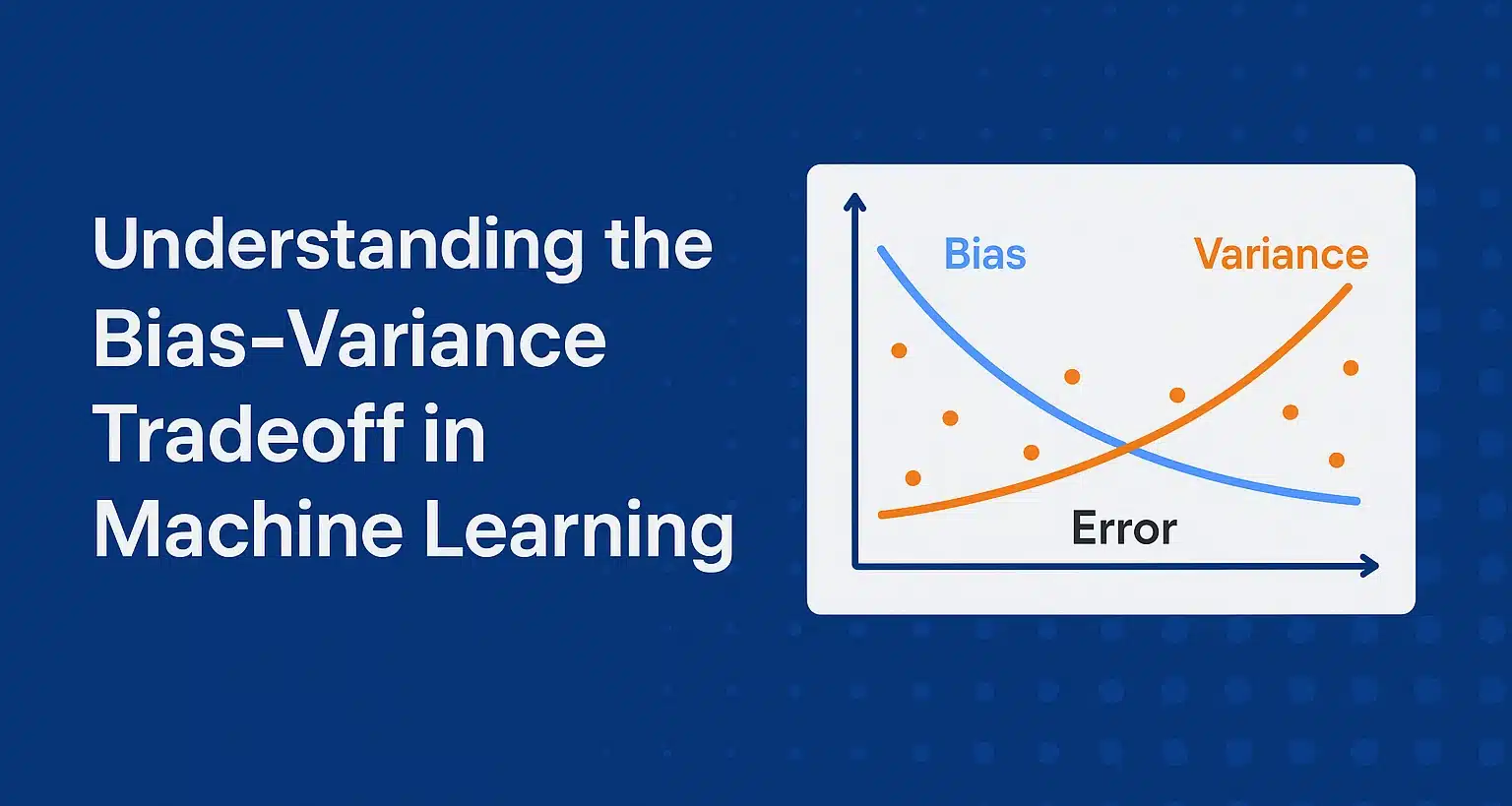

Visual understanding:

Imagine the complexity of the XA -axis rendering model. Initially, as the complexity increases, it orders distortion. To some extent, however, the error caused by the scattering begins to develop. Point Total Minimum Error Lies between these extremes.

Strategy for Balancing Distortion and Scattering

Balance of distortion and scattering requires intentional control of model design, data management and training methodology. Below are key techniques used by experts:

1. Model selection

- A simple species when the data is limited.

- If sufficient high -quality data is available, use complex models.

- Example: Use logistics regression for binary classification task with limited functions; Help CNN or transformers for image/text data.

2. Regularization

3. Cross validation

- K-Fold or stratified cross validation provides a reliable estimate of how well the model will work on invisible data.

- It helps to detect the scattering earlier.

Learn how to log in K-Fold Cross Verification If you want to get a more reliable picture of the actual performance of your model across different data distribution.

4.

- Like the techniques of bagging (eg accidental chairmen), they reduce the scattering.

- Increasing (eg XGBOOST) gradually decreases.

Reading: Explore Bagging and strengthening For better model performance.

5. Expand the training data

- High dispersion models benefit from multiple data, helping them to generalize them better.

- It is commonly used by techniques such as increasing data (for images) or generating synthetic data (via Smote or Gan).

Applications and consequences in the real world

The compromise of distortion of deviation is not only academic that directly affects performance in real ML:

- Detection: High distortion may miss complex fraud formulas; A high scattering can indicate normal behavior as a scam.

- Medical diagnosis: Model with high distortion could ignore the symptoms of nuances; Models with high variations can change predictions with minor changes in patient data.

- Recumind Systems: Setting up the right balance ensures that these are suggestions without switching to the behavior of past users.

Common pitfalls and misconceptions

- Legendary: More complicated models are always better if they introduce a high scattering.

- Abuse of metric verification: Relying only on the accuracy of training leads to a false feeling of model quality.

- Ignoring learning curves: Rendering vs. training Verification errors over time reveal valuable knowledge that the model suffers from distortion or scattering.

Conclusion

Tea Compromise of distortion It is the cornerstone of the evaluation and tuning of the model. Models with high distortion are too simplified to capture the complexity of data, while high -scatter models are too sensitive to it. The art of machine learning consists in efficient control of this compromise, selecting the right model, regulating applications, strict verification and feeding the algorithm with quality data.

Deep understanding bias and scattering in machine learning Allow experts to build models that are only accurate, bullshit, scalable and robust in the production environment.

If you are a newcomer in this concept or want to strengthen your foundations, explore this free race on the compromise of bias and deviations to see examples in the real world and learn how to effectively balance your models.

Frequently asked questions (FAQ)

1. Can the model have both high bias and high dispersion?

Yes. For example, a model trained in noisy or poorly marked data with insufficient architecture can simultaneously stimuling and transforming in different ways.

2. How does it affect the selection of distortion and scattering functions?

The selection of functions can reduce the scattering by removing irrelevant or noisy variables, but if informative features are removed, it can attract distortion.

3. Does the growing data on distortion or scattering reduce?

Primary, reduces the scattering. However, if the model is fundamentally too simple, the distortion persists without data size.

4. How do the methods together help with compromise of distortion?

Bagging reduces the scattering by averaging predictions, while strengthening helps to reduce distortion by a combination of weak students of Sequedentilly.

5. What role of play validation plays in the control of bias and scattering?

Cross validation provides a robust mechanism for evaluating the performance of the model and detects where errors are caused by distortion or scattering.