The process of discovering molecules that have the properties needed to create new medicines and materials is cumbersome and expensive, consuming vast computational resources and months of human labor to narrow down the enormous space of potential candidates.

Large language models (LLMs) such as ChatGPT could streamline this process, but enabling an LLM to understand and reason about the atoms and bonds that form a molecule, the same way it does with the words that form sentences, has been a scientific stumbling block.

Researchers from MIT and the MIT-IBM Watson AI Lab have created a promising approach that augments an LLM with other machine-learning models known as graph-based models, which are specifically designed for generating and predicting molecular structures.

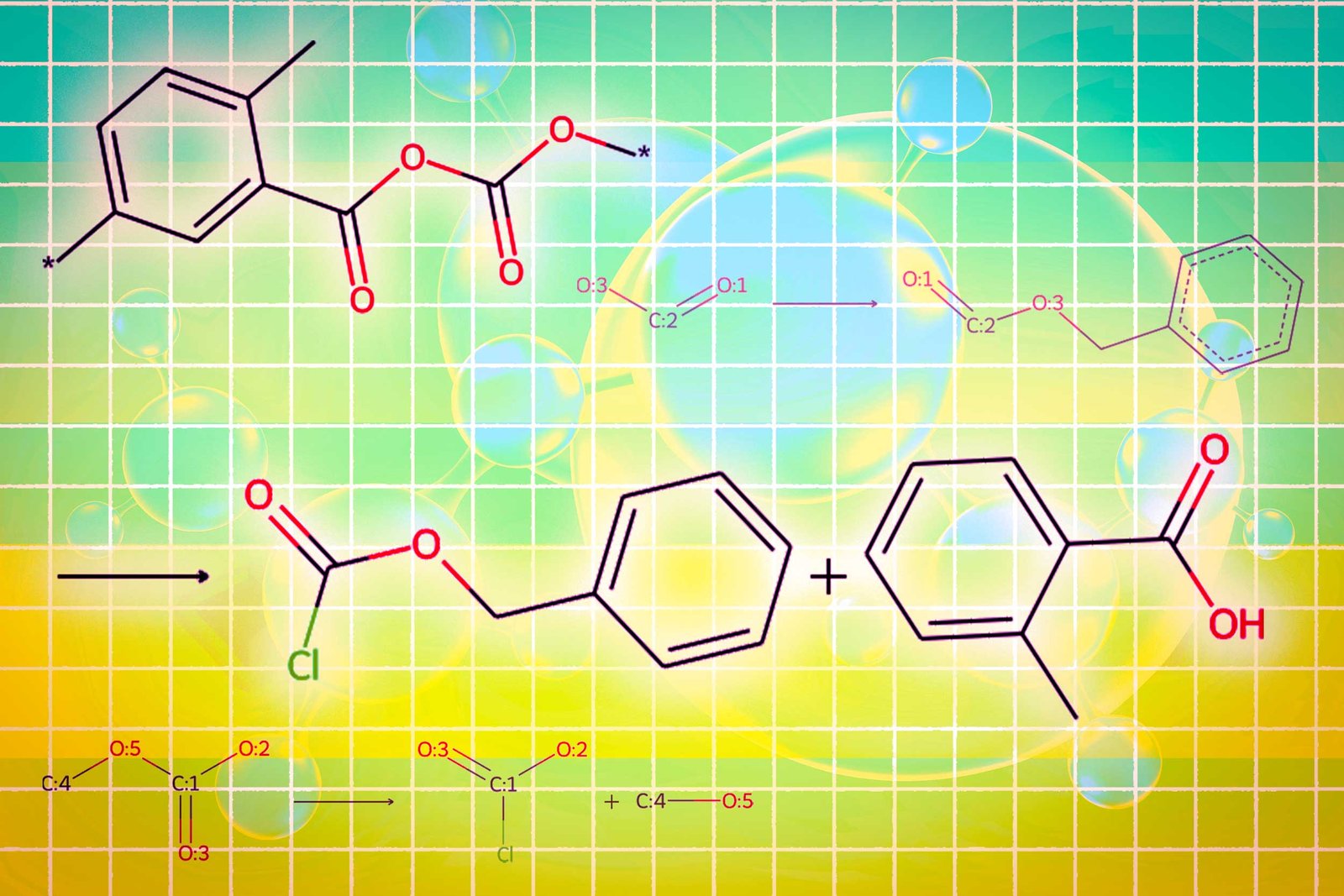

Their method uses a base LLM to interpret natural language queries specifying the desired molecular properties. It automatically switches between the base LLM and graph-based AI modules to design the molecule, explain the rationale, and generate a step-by-step plan to synthesize it. It interleaves text, graph, and synthesis step generation, combining words, graphs, and reactions into a common vocabulary for the LLM to consume.

Compared to existing LLM-based approaches, this multimodal technique generated molecules that better matched user specifications and were more likely to have a valid synthesis plan, improving the success ratio from 5 percent to 35 percent.

It also outperformed LLMs that are more than 10 times its size and that design molecules and synthesis routes using only text-based representations, suggesting that multimodality is key to the new system's success.

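“This could hopefully be an end-to-end solution where, from start to finish, we would automate the entire process of designing and making a molecule. If an LLM could just give you the answer in a few seconds, it would be a huge time-saver for pharmaceutical companies,” says Michael Sun, an MIT graduate student and co-author of a paper on this technique.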

Sun's co-authors include lead author Gang Liu, a graduate student at the University of Notre Dame; Wojciech Matusik, a professor of electrical engineering and computer science at MIT who leads the Computational Design and Fabrication Group within the Computer Science and Artificial Intelligence Laboratory (CSAIL); Meng Jiang, an associate professor at the University of Notre Dame; and senior author Jie Chen, a senior research scientist and manager in the MIT-IBM Watson AI Lab. The research will be presented at the International Conference on Learning Representations.

The best of both worlds

Large language models are not built to understand the nuances of chemistry, which is one reason they struggle with inverse molecular design, the process of identifying molecular structures that have certain functions or properties.

LLMs convert text into representations called tokens, which they use to sequentially predict the next word in a sentence. However, molecules are “graph structures” composed of atoms and bonds with no particular ordering, which makes them difficult to encode as sequential text.

On the other hand, powerful graph-based AI models represent atoms and molecular bonds as interconnected nodes and edges in a graph. Although these models are popular for inverse molecular design, they require complex inputs, cannot understand natural language, and yield results that can be difficult to interpret.
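The contrast between sequential text and an unordered graph can be illustrated with a small sketch. It is not taken from the researchers' code: the helper names (`make_molecule`, `relabel`, `signature`) are hypothetical, and the signature is a simple order-independent summary, not a full graph-isomorphism test. It stores the heavy atoms of acetic acid as nodes and bonds as edges, then renumbers the atoms to show that the listing changes while the graph stays the same.

```python
from collections import Counter

def make_molecule(atoms, bonds):
    """atoms: list of element symbols; bonds: iterable of (i, j, bond_order)."""
    return {
        "atoms": list(atoms),
        "bonds": {(min(i, j), max(i, j)): order for i, j, order in bonds},
    }

def relabel(mol, perm):
    """Renumber atoms so that old index i becomes perm[i]."""
    atoms = [None] * len(mol["atoms"])
    for old, new in enumerate(perm):
        atoms[new] = mol["atoms"][old]
    bonds = [(perm[i], perm[j], order) for (i, j), order in mol["bonds"].items()]
    return make_molecule(atoms, bonds)

def signature(mol):
    """Order-independent summary: each atom's element plus its sorted neighbors."""
    neighbors = {i: [] for i in range(len(mol["atoms"]))}
    for (i, j), order in mol["bonds"].items():
        neighbors[i].append((mol["atoms"][j], order))
        neighbors[j].append((mol["atoms"][i], order))
    return Counter(
        (mol["atoms"][i], tuple(sorted(neighbors[i]))) for i in neighbors
    )

# Heavy atoms of acetic acid, CH3-C(=O)-OH (hydrogens omitted for brevity).
acetic = make_molecule(["C", "C", "O", "O"], [(0, 1, 1), (1, 2, 2), (1, 3, 1)])
shuffled = relabel(acetic, [3, 0, 2, 1])

print(acetic["atoms"], shuffled["atoms"])        # two different atom listings
print(signature(acetic) == signature(shuffled))  # same underlying graph
```

Any of the many possible atom numberings describes the same molecule, which is why a single left-to-right string is an awkward fit for it.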

The MIT researchers combined an LLM with graph-based AI models into a unified framework that gets the best of both worlds.

Llamole, which stands for large language model for molecular discovery, uses a base LLM as a gatekeeper to understand a user's query, a plain-language request for a molecule with certain properties.

For example, a user might seek a molecule that can penetrate the blood-brain barrier and inhibit HIV, given that it has a molecular weight of 209 and certain bond characteristics.

As the LLM predicts text in response to the query, it switches between graph modules.

One module uses a graph diffusion model to generate the molecular structure conditioned on the input requirements. A second module uses a graph neural network to encode the generated molecular structure back into tokens for the LLM to consume. The final graph module is a graph reaction predictor, which takes an intermediate molecular structure as input and predicts a reaction step, searching for the exact set of steps needed to make the molecule from basic building blocks.

The researchers created a new type of trigger token that tells the LLM when to activate each module. When the LLM predicts a “design” trigger token, it switches to the module that sketches a molecular structure, and when it predicts a “retro” trigger token, it switches to the retrosynthetic planning module that predicts the next reaction step.

“The beauty of this is that everything the LLM generates before activating a particular module gets fed into that module itself. The module is learning to operate in a way that is consistent with what came before,” Sun says.

Likewise, the output of each module is encoded and fed back into the LLM's generation process, so it understands what each module did and continues predicting tokens based on those data.
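The interleaving described above can be sketched as a simple control loop. This is only an illustration under stated assumptions, not the researchers' implementation: the token spellings `<design>` and `<retro>`, the stub modules, and the `encode` helper are all hypothetical, and a fixed script of tokens stands in for a real LLM's predictions.

```python
DESIGN, RETRO = "<design>", "<retro>"  # hypothetical trigger-token spellings

def design_module(context):
    """Stub for the graph diffusion generator; it sees all text produced so far."""
    return {"kind": "molecule", "seen": list(context)}

def retro_module(context):
    """Stub for the reaction predictor; it sees text plus earlier module outputs."""
    return {"kind": "reaction_step", "seen": list(context)}

def encode(output):
    """Stub for turning a module's output into something the LLM can read."""
    return f"[{output['kind']}]"

MODULES = {DESIGN: design_module, RETRO: retro_module}

def generate(scripted_tokens):
    """Run the interleaved loop over a fixed token script (no real model)."""
    context, calls = [], []
    for token in scripted_tokens:
        module = MODULES.get(token)
        if module is None:
            context.append(token)        # ordinary text token
            continue
        output = module(context)         # prior context is fed into the module
        calls.append(output)
        context.append(encode(output))   # module output is fed back to the LLM
    return context, calls

context, calls = generate(
    ["The", "molecule", DESIGN, "can", "be", "made", "by", RETRO]
)
print(context)
```

In this toy run the retro stub receives a context that already contains the encoded design output, mirroring the idea that each module works from everything that came before it.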

A better, simpler molecular structure

In the end, Llamole outputs an image of the molecular structure, a textual description of the molecule, and a step-by-step synthesis plan that details how to make it, down to individual chemical reactions.

In experiments involving the design of molecules that matched user specifications, Llamole outperformed 10 standard LLMs, four fine-tuned LLMs, and state-of-the-art domain-specific methods. At the same time, it boosted the retrosynthetic planning success rate from 5 percent to 35 percent by generating higher-quality molecules, meaning they had simpler structures and lower-cost building blocks.

“On their own, LLMs struggle to figure out how to synthesize molecules because it requires a lot of multistep planning. Our method can generate better molecular structures that are also easier to synthesize,” says Liu.

To train and evaluate Llamole, the researchers built two datasets from scratch, because existing datasets of molecular structures did not contain enough details. They augmented hundreds of thousands of patented molecules with AI-generated natural language descriptions and customized description templates.

The dataset they built to fine-tune the LLM includes templates related to 10 molecular properties, so one limitation of Llamole is that it is trained to design molecules with respect to only those 10 numerical properties.

In future work, the researchers want to generalize Llamole so that it can incorporate any molecular property. In addition, they plan to improve the graph modules to boost Llamole's retrosynthesis success rate.

And in the long run, they hope to use this approach to go beyond molecules, creating multimodal LLMs that can handle other types of graph-based data, such as interconnected sensors in a power grid or transactions in a financial market.

“Llamole demonstrates the feasibility of using large language models as an interface to complex data beyond textual description, and we anticipate them to be a foundation that interacts with other AI algorithms to solve any graph problems,” says Chen.

This research is funded, in part, by the MIT-IBM Watson AI Lab, the National Science Foundation, and the Office of Naval Research.
