When we launched Amazon SageMaker AI in 2017, we had a clear mission: to put machine learning in the hands of any developer, regardless of their skill level. We wanted infrastructure engineers who were “complete machine learning noobs” to be able to achieve meaningful results in a week. We wanted to remove the barriers that made ML accessible only to a select few with deep expertise.

Eight years later, that mission has evolved. Today’s ML developers don’t just train simple models; they build generative AI applications that require massive compute, complex infrastructure, and sophisticated tooling. The problems keep getting harder, but our mission remains the same: to eliminate the undifferentiated heavy lifting so builders can focus on what matters most.

In the last year, I’ve met customers doing incredible work with generative AI: training massive models, fine-tuning them for specific use cases, and building apps that would have seemed like science fiction just a few years ago. But in these conversations, I keep hearing about the same frustrations. Workarounds. Impossible choices. Time lost to problems that should already be solved. A few weeks ago, we released several features that address these friction points: secure remote connections to SageMaker AI, end-to-end observability for large-scale model development, deployment of models to your existing HyperPod clusters, and training resiliency for Kubernetes workloads. Let me walk you through them.

The workaround tax

Here’s a problem I didn’t expect to still be facing in 2025 – developers have to choose between their preferred development environment and access to powerful computing.

I spoke with a customer who described what they called the “SSH workaround tax”: the time, cost, and complexity of connecting their local development tools to SageMaker AI compute. They had built an elaborate system of SSH tunnels and port forwarding that kind of worked, until it didn’t. When we moved from SageMaker Studio Classic to the latest version of SageMaker Studio, their workaround broke completely. They faced a choice: abandon their carefully customized VS Code setup, with all its extensions and workflows, or lose access to the compute they needed for their ML workloads.

Developers shouldn’t have to choose between their development tools and cloud computing. It’s like being forced to choose between electricity and running water in a house – both are essential and the choice itself is the problem.

The technical challenge was interesting. SageMaker Studio spaces are isolated, managed environments with their own security model and lifecycle. How do you securely tunnel IDE connections through AWS infrastructure without exposing credentials or requiring customers to become network experts? The solution had to work for different types of users: some wanted one-click access directly from SageMaker Studio, while others preferred to start their day in their local IDE and manage all their spaces from there. We also needed to improve on the work that had been done for SageMaker SSH Helper.

So we built a new StartSession API that creates secure connections specifically for SageMaker AI spaces, establishing SSH-over-SSM tunnels through AWS Systems Manager that maintain all SageMaker AI security boundaries while providing seamless access. For VS Code users coming from Studio, the authentication context is carried over automatically. For those who want their local IDE as the primary entry point, administrators can provide local credentials that work through the AWS Toolkit for VS Code plugin. Most importantly, the system handles network interruptions gracefully and reconnects automatically, because we know builders hate losing work when connections drop.
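To make the SSH-over-SSM idea concrete, here is a minimal Python sketch that generates an OpenSSH config entry using the generic Systems Manager pattern for EC2 managed nodes: `ProxyCommand` hands the SSH byte stream to `aws ssm start-session` with the `AWS-StartSSHSession` document, so no inbound SSH port has to be open on the target. This is an illustration under assumptions, not how SageMaker AI or the AWS Toolkit wires up Studio spaces internally; the function name `ssm_ssh_config`, the host alias, instance ID, user, and region are all hypothetical.

```python
"""Sketch: build an OpenSSH config entry that tunnels SSH over AWS Systems Manager.

Assumption: this uses the generic Session Manager SSH pattern for EC2 managed
nodes, NOT the SageMaker AI remote-access implementation. All names and IDs
used below are hypothetical.
"""


def ssm_ssh_config(host_alias: str, target_id: str, user: str, region: str) -> str:
    # ProxyCommand pipes the SSH session through `aws ssm start-session`.
    # The AWS-StartSSHSession document forwards to port %p on the target,
    # where %p is OpenSSH's token for the destination port.
    proxy = (
        'sh -c "aws ssm start-session '
        f"--target {target_id} "
        "--document-name AWS-StartSSHSession "
        "--parameters 'portNumber=%p' "
        f'--region {region}"'
    )
    lines = [
        f"Host {host_alias}",
        f"    HostName {target_id}",
        f"    User {user}",
        f"    ProxyCommand {proxy}",
        # Keepalives let the client notice a dead tunnel quickly
        # instead of hanging, so it can reconnect.
        "    ServerAliveInterval 30",
        "    ServerAliveCountMax 3",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(ssm_ssh_config("my-ml-box", "i-0123456789abcdef0", "ec2-user", "us-east-1"))
```

Appending the printed entry to `~/.ssh/config` would let `ssh my-ml-box` (or an IDE's remote-SSH extension) connect through Systems Manager, assuming the AWS CLI, the Session Manager plugin, and suitable IAM permissions are in place.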

This solved the number one SageMaker AI feature request, but as we dug deeper into what was slowing down ML teams, we found that the same pattern was playing out on an even larger scale in the infrastructure that supports model training itself.

The observability paradox

The second problem is what I call the “observability paradox”: the systems designed to prevent problems become a source of problems themselves.

When you’re running training, fine-tuning, or inference jobs on hundreds or thousands of GPUs, failures are inevitable. Hardware overheats. Network connections drop. Memory gets corrupted. The question isn’t whether problems will occur; it’s whether you’ll catch them before they spiral into catastrophic failures that waste days of precious compute time.

To monitor these massive clusters, teams deploy observability systems that collect metrics from every GPU, every network interface, and every storage device. But the monitoring system itself becomes a performance bottleneck. Self-managed collectors hit CPU limits and can’t keep up at scale. Monitoring agents fill up disk space, causing the very training failures they are supposed to prevent.

I’ve seen teams training foundation models on hundreds of instances suffer cascading failures that could have been avoided. A few overheating GPUs trigger thermal throttling, which slows down the entire distributed training job. Network interfaces start dropping packets under increased load. What should be a minor hardware issue turns into a multi-day investigation across fragmented monitoring systems while expensive compute sits idle.

When things go wrong, data scientists become detectives, piecing together clues across fragmented tools—CloudWatch for containers, custom dashboards for GPUs, network monitors for interconnects. Each tool shows a piece of the puzzle, but it takes days to manually correlate them.

This was one of those situations where we saw customers doing work that had nothing to do with the real business problems they were trying to solve. So we asked ourselves: how do you build observability infrastructure that scales with massive AI workloads without becoming the bottleneck it’s meant to prevent?

The solution we built fundamentally rethinks the observability architecture. Instead of single-threaded collectors that struggled to process metrics from thousands of GPUs, we implemented auto-scaling collectors that grow and shrink with the workload. The system automatically correlates the high-cardinality metrics generated within HyperPod, using algorithms designed for massive time-series data. It detects not only binary failures but also what we call gray failures: partial, intermittent problems that are hard to detect but slowly degrade performance. Think of GPUs that throttle themselves because of overheating, or network interfaces that drop packets under load. And you get all of this out of the box, in a single dashboard built on our experience training on GPU clusters at scale, with no configuration required.
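To illustrate why gray failures need a different check than up/down health probes, here is a minimal Python sketch of one simple peer-comparison technique: a GPU is flagged when its throughput falls more than a tolerance below the cluster median in at least a given fraction of recent snapshots. This is not HyperPod’s algorithm; the function name, metric (throughput per GPU), thresholds, and sample data are hypothetical.

```python
"""Sketch: flag gray (partial, intermittent) GPU slowdowns by peer comparison.

Hypothetical technique for illustration: compare each GPU against the cluster
median in each snapshot, and flag GPUs that are slow in enough snapshots.
"""
from statistics import median


def gray_failures(
    snapshots: list[dict[str, float]],
    tolerance: float = 0.15,
    min_fraction: float = 0.5,
) -> list[str]:
    """Each snapshot maps GPU ID -> throughput (higher is better)."""
    slow_counts: dict[str, int] = {}
    for snapshot in snapshots:
        # The median is a peer baseline that a few slow GPUs barely move.
        cutoff = (1.0 - tolerance) * median(snapshot.values())
        for gpu, value in snapshot.items():
            if value < cutoff:
                slow_counts[gpu] = slow_counts.get(gpu, 0) + 1
    # Requiring repeated slowness filters out one-off blips.
    needed = min_fraction * len(snapshots)
    return sorted(gpu for gpu, count in slow_counts.items() if count >= needed)


if __name__ == "__main__":
    # Hypothetical data: gpu-3 is throttling most of the time; gpu-1 blips once.
    snaps = [
        {"gpu-0": 100, "gpu-1": 98, "gpu-2": 101, "gpu-3": 70},
        {"gpu-0": 99, "gpu-1": 60, "gpu-2": 100, "gpu-3": 72},
        {"gpu-0": 101, "gpu-1": 99, "gpu-2": 100, "gpu-3": 100},
        {"gpu-0": 100, "gpu-1": 100, "gpu-2": 99, "gpu-3": 65},
    ]
    print(gray_failures(snaps))  # ['gpu-3']
```

None of these GPUs is “down”, so a binary health check passes them all; only the comparison against peers over time surfaces `gpu-3` as the one quietly slowing a synchronous training job.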

Teams that previously spent days identifying, investigating, and remediating task performance issues now identify root causes in minutes. Instead of reactively troubleshooting after a failure, they receive proactive alerts when performance begins to decline.
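One common way to turn “performance begins to decline” into an alert is to smooth a throughput metric and compare it with a baseline. The Python sketch below uses an exponentially weighted moving average (EWMA) for this; it is an illustrative technique under assumed parameters (smoothing factor, 10% decline threshold, five warm-up samples), and the class name `DegradationAlert` is hypothetical rather than part of any HyperPod API.

```python
"""Sketch: raise a proactive alert when a smoothed metric drifts below baseline.

Hypothetical illustration using an EWMA; thresholds are assumptions.
"""


class DegradationAlert:
    def __init__(self, alpha: float = 0.3, drop: float = 0.10, warmup: int = 5):
        self.alpha = alpha    # EWMA smoothing factor (higher reacts faster)
        self.drop = drop      # fractional decline from baseline that triggers an alert
        self.warmup = warmup  # number of samples averaged to form the baseline
        self._warm: list[float] = []
        self.baseline: float | None = None
        self.ewma: float | None = None

    def update(self, value: float) -> bool:
        """Feed one throughput sample; return True if an alert should fire."""
        if self.baseline is None:
            # Still establishing what "healthy" looks like.
            self._warm.append(value)
            if len(self._warm) == self.warmup:
                self.baseline = sum(self._warm) / self.warmup
                self.ewma = self.baseline
            return False
        # Smoothing keeps single noisy samples from triggering alerts.
        self.ewma = self.alpha * value + (1 - self.alpha) * self.ewma
        return self.ewma < (1 - self.drop) * self.baseline


if __name__ == "__main__":
    alert = DegradationAlert()
    # Hypothetical samples: stable at 100, then a gradual decline.
    for sample in [100] * 5 + [97, 94, 91, 88, 85, 82]:
        if alert.update(sample):
            print(f"throughput degrading: latest sample {sample}")
```

The alert fires on the gradual slide rather than waiting for a hard failure, which is the shift from reactive troubleshooting to proactive warning described above. The `float | None` annotations assume Python 3.10 or later.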

The compound effect

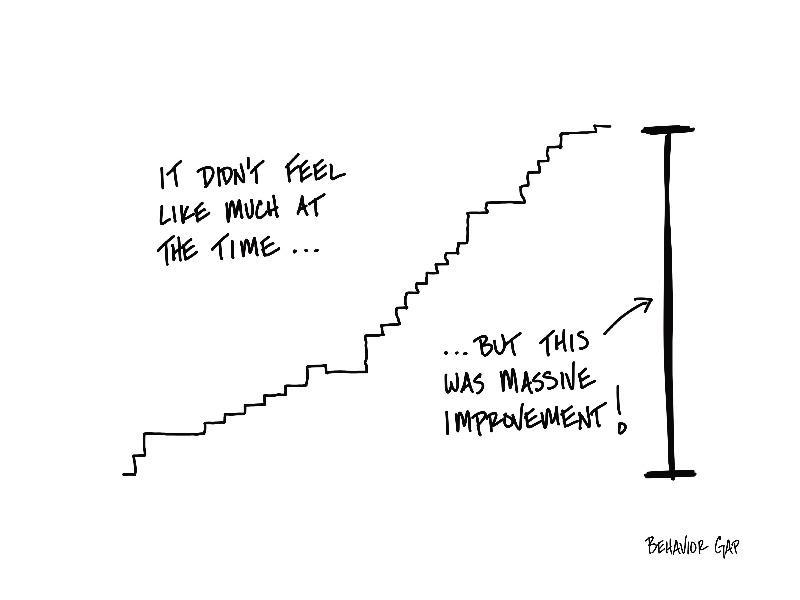

What strikes me about these problems is how they compound in ways that aren’t immediately obvious. The SSH workaround tax doesn’t just cost time; it discourages the rapid experimentation that leads to breakthroughs. When it takes hours instead of minutes to set up your development environment, you’re less likely to try that new approach or test a different architecture.

The observability paradox creates a similar psychological barrier. When infrastructure issues take days to diagnose, teams become conservative. They stick to smaller, safer experiments rather than pushing the boundaries of what’s possible. They over-provision resources to avoid failures instead of optimizing for efficiency. Infrastructure friction becomes innovation friction.

But those aren’t the only friction points we’ve worked to eliminate. In my experience building distributed systems at scale, one of the most persistent challenges has been the artificial boundaries we create between stages of the machine learning lifecycle. Organizations maintain separate infrastructure for training models and for serving them in production. That pattern made sense when these workloads had fundamentally different characteristics, but it has become increasingly inefficient as both have converged on similar computational requirements. With the new model deployment capabilities in SageMaker HyperPod, we remove this boundary entirely: you can train your foundation models on a cluster and deploy them immediately to the same infrastructure, maximizing resource utilization while reducing the operational complexity of managing multiple environments.

For teams using Kubernetes, we’ve added a HyperPod training operator that significantly improves fault recovery. When a failure occurs, it restarts only the affected resources, not the entire job. The operator also monitors for common training issues such as stalled batches and non-numeric (NaN) loss values. Teams can define custom recovery policies through straightforward YAML configurations. These capabilities dramatically reduce resource waste and operational overhead.
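The decision logic behind “restart only the affected resources” can be sketched in a few lines. The Python below is a hypothetical model of such a recovery policy, not the HyperPod training operator’s implementation or its YAML schema: `WorkerStatus`, `plan_recovery`, the action names, and the 600-second stall timeout are all assumptions. As one possible design choice, it treats a NaN or infinite loss as a job-wide problem (in data-parallel training, bad values usually propagate to every rank), while crashed or stalled workers are restarted individually.

```python
"""Sketch: a hypothetical training-recovery policy.

Not the HyperPod operator's logic or configuration format; all names and
thresholds here are illustrative assumptions.
"""
import math
from dataclasses import dataclass


@dataclass
class WorkerStatus:
    name: str                  # hypothetical worker/pod name
    healthy: bool              # False if the worker process has crashed
    last_loss: float           # most recently reported training loss
    seconds_since_step: float  # time since the worker last completed a batch


def plan_recovery(
    workers: list[WorkerStatus], stall_timeout_s: float = 600.0
) -> tuple[str, list[str]]:
    """Return (action, target worker names)."""
    # A NaN/inf loss usually means shared model state is corrupted, so this
    # policy restarts the whole job from its last checkpoint.
    if any(not math.isfinite(w.last_loss) for w in workers):
        return "restart_job_from_checkpoint", sorted(w.name for w in workers)
    # Crashed or stalled workers are restarted individually; healthy ones keep running.
    affected = sorted(
        w.name
        for w in workers
        if not w.healthy or w.seconds_since_step > stall_timeout_s
    )
    if affected:
        return "restart_workers", affected
    return "none", []


if __name__ == "__main__":
    fleet = [
        WorkerStatus("w0", True, 2.1, 5.0),
        WorkerStatus("w1", False, 2.1, 5.0),   # crashed
        WorkerStatus("w2", True, 2.0, 900.0),  # stalled batch
    ]
    print(plan_recovery(fleet))  # ('restart_workers', ['w1', 'w2'])
```

In a real cluster, the equivalent thresholds and actions would be declared in the operator’s own YAML configuration rather than in application code.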

These updates (secure remote connections, auto-scaling observability collectors, seamless deployment of models from training environments, and improved fault recovery) work together to address the friction points that keep developers from focusing on what matters most: building better AI applications. When you remove these friction points, you don’t just speed up existing workflows; you enable entirely new ways of working.

This continues the evolution of our original SageMaker AI vision. Each step forward brings us closer to the goal of putting machine learning in the hands of any developer, with as little undifferentiated heavy lifting as possible.

Now get building!